LicensePlateRecognition

Introduction

Automatic license-plate recognition (ALPR), also known as automatic number-plate recognition (ANPR), is a technology that uses optical character recognition (OCR) on images to read vehicle registration plates and create vehicle location data. It can use existing closed-circuit television, road-rule enforcement cameras, or cameras specifically designed for the task. Police forces around the world use ALPR for law enforcement, including checking whether a vehicle is registered or licensed. It is also used for electronic toll collection on pay-per-use roads and, for example by highways agencies, to catalog the movements of traffic.

ALPR systems can store the images captured by the cameras as well as the text read from the license plate, and some can be configured to store a photograph of the driver. Systems commonly use infrared lighting so that the camera can take pictures at any time of day or night.

In this tutorial, we are going to use a Raspberry Pi 3B to recognize the license plate numbers of vehicles with OpenALPR, an open-source platform.

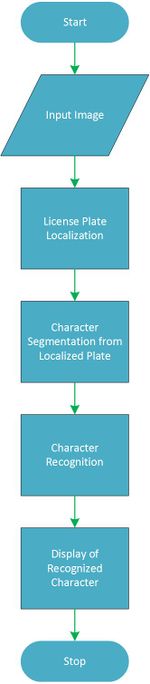

OpenALPR operates as a pipeline: the input is an image, the image is processed in a series of stages, and the output is a list of possible plate numbers found in the image. OpenALPR builds on three other libraries:

- OpenCV

- Leptonica

- Tesseract-OCR

In a nutshell, Tesseract (together with Leptonica, its image-processing library) performs the OCR: given a cleaned, cropped character, it tries to recognize its value. OpenCV provides a wide range of computer vision functions, and OpenALPR uses them for just about everything else.

Prerequisites

- You should have the Raspberry Pi set up and ready for use. Go to this site or click here for the setup steps.

- You should have SSH enabled. To do this in 'headless mode', follow the steps here. Afterwards, you can access the Raspberry Pi using PuTTY or any other SSH client.

Note: 'Headless mode' simply means working without a monitor directly connected to the Raspberry Pi.

- You should be familiar with the Raspberry Pi camera module and have it enabled.

Software Requirements

The following software is required for the project:

Note: If you use the Pi with a monitor, you can download the software from the links above and copy it to the Pi's home directory (/home/pi/). If you run the Raspberry Pi in headless mode, download the zipped files from the links above to your PC, unzip them, and copy them to the Raspberry Pi using WinSCP.

Hardware Requirements

The following hardware is needed:

- Raspberry Pi 3 (recommended for its decent processing power). A Raspberry Pi Zero may work, but it would heat up considerably and run slower.

- Raspberry Pi Camera Module V2

- 16GB Class 10 Micro-SD Card

- 5V-2A USB Power Supply

- A Router (or a mobile hotspot with internet access)

Part 1: Installing Dependencies & Building Binaries

Installing Dependencies

curl http://www.linux-projects.org/listing/uv4l_repo/lpkey.asc | sudo apt-key add -

sudo -- sh -c "echo deb http://www.linux-projects.org/listing/uv4l_repo/raspbian/stretch stretch main >> /etc/apt/sources.list"

sudo apt-get update

sudo apt-get install autoconf automake libtool

sudo apt-get install libleptonica-dev

sudo apt-get install libicu-dev libpango1.0-dev libcairo2-dev

sudo apt-get install cmake git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev

sudo apt-get install python-dev python-numpy libjpeg-dev libpng-dev libtiff-dev libjasper-dev libdc1394-22-dev

sudo apt-get install virtualenvwrapper

sudo apt-get install liblog4cplus-dev

sudo apt-get install libcurl4-openssl-dev

sudo apt-get install v4l-utils libv4l-dev v4l2ucp

Building Leptonica

cd ~/leptonica1.74

./autobuild

./configure

sudo make

sudo make install

Building Tesseract-OCR

cd ~/tesseract

sudo apt-get install autoconf-archive

sudo chmod u+x autogen.sh

sudo ./autogen.sh

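(it should print "All done" if successful)

./configure

(it should report that configuration is done if successful)

sudo make

sudo make install

sudo ldconfig

export TESSDATA_PREFIX=</path/to/tessdata> (e.g. export TESSDATA_PREFIX=/home/pi/ALPR/tessdata/tessdata-master)

Note that export only sets the variable for the current terminal session; to make it permanent, add the same export line to ~/.bashrc.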

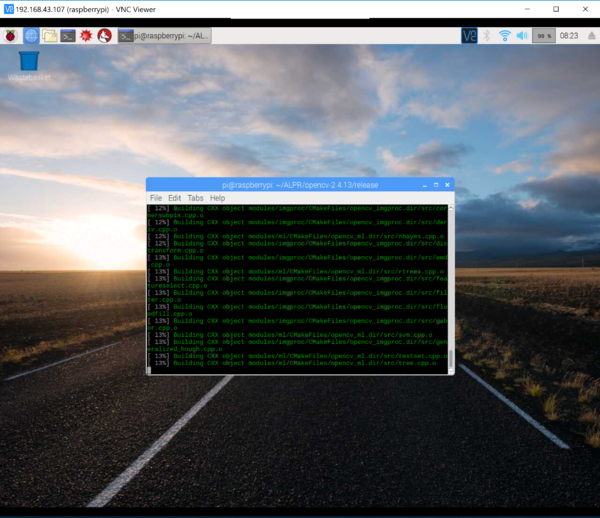
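Because the OCR stage depends on Tesseract finding its language data, it can help to confirm that TESSDATA_PREFIX is set in the current shell and points at a folder of .traineddata files. The following is a minimal Python 3 sketch; the helper names are hypothetical, and it assumes (as in the example path above) that the variable names the folder that directly contains the .traineddata files. Tesseract versions differ on whether the variable should name that folder or its parent, so adjust the check if your build expects the parent directory.

```python
import glob
import os


def find_traineddata(tessdata_dir):
    """Return the sorted file names of *.traineddata files in tessdata_dir."""
    pattern = os.path.join(tessdata_dir, "*.traineddata")
    return sorted(os.path.basename(path) for path in glob.glob(pattern))


def check_tessdata_prefix(environ=None):
    """Return (ok, message) describing the TESSDATA_PREFIX setting.

    Assumes the variable points directly at the folder holding the
    .traineddata files, as in the tutorial's example path.
    """
    env = os.environ if environ is None else environ
    prefix = env.get("TESSDATA_PREFIX")
    if not prefix:
        return False, "TESSDATA_PREFIX is not set in this shell"
    if not os.path.isdir(prefix):
        return False, "TESSDATA_PREFIX points to a missing directory: " + prefix
    files = find_traineddata(prefix)
    if not files:
        return False, "no .traineddata files found in " + prefix
    return True, "found " + ", ".join(files)


if __name__ == "__main__":
    ok, message = check_tessdata_prefix()
    print(("OK: " if ok else "Problem: ") + message)
```

Run it from the same terminal in which you exported the variable, since a new session will not see it unless it was added to ~/.bashrc.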

Building OpenCV-2.4.13

cd /usr/local/src

sudo wget -O opencv-2.4.13.zip https://github.com/Itseez/opencv/archive/2.4.13.zip

sudo unzip -q opencv-2.4.13.zip

cd /usr/local/src/opencv-2.4.13

sudo mkdir release

cd release

sudo cmake -DENABLE_PRECOMPILED_HEADERS=OFF -D CMAKE_BUILD_TYPE=RELEASE -D CMAKE_INSTALL_PREFIX=/usr/local -D BUILD_NEW_PYTHON_SUPPORT=ON -D INSTALL_C_EXAMPLES=ON -D INSTALL_PYTHON_EXAMPLES=ON -D WITH_FFMPEG=OFF -D BUILD_EXAMPLES=ON ..

sudo make

sudo make install

Building OpenALPR

cd path/to/src

sudo nano CMakeLists.txt

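Add the following lines:

SET(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11")

SET(OpenCV_DIR "/PATH/TO/OPENCV/release")

SET(Tesseract_DIR "/PATH/TO/TESSERACT/LIB")

SET(CMAKE_CXX_STANDARD 11)

SET(CMAKE_CXX_STANDARD_REQUIRED ON)

SET(CMAKE_CXX_EXTENSIONS OFF)

(The -std=c++11 flag and the three CMAKE_CXX_* settings make CMake compile OpenALPR as C++11; OpenCV_DIR and Tesseract_DIR tell it where to find the OpenCV build and the Tesseract libraries.)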

Using the nano text editor, open /usr/local/include/tesseract/unichar.h and /usr/local/include/tesseract/unicharset.h and manually replace each bare string with std::string; otherwise OpenALPR does not compile properly against these headers.
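If you prefer not to edit the two headers by hand, the replacement can be scripted. The following is a minimal Python 3 sketch that is not part of OpenALPR or Tesseract; the script name fix_headers.py and the helper names are hypothetical. It keeps a .orig backup of each file, skips preprocessor lines so that #include <string> survives, and leaves std::string, wstring and similar names untouched. It will still rewrite the word string inside comments, which is harmless, but check the result in nano before building.

```python
import re
import shutil
import sys

# Bare identifier `string`: not already qualified (no `::` before it)
# and not part of a longer name such as `wstring` or `string_view`.
BARE_STRING = re.compile(r"(?<![\w:])string(?!\w)")


def qualify_strings(source):
    """Return `source` with each bare `string` replaced by `std::string`.

    Preprocessor lines (e.g. `#include <string>`) are left untouched.
    """
    out = []
    for line in source.splitlines(keepends=True):
        if line.lstrip().startswith("#"):
            out.append(line)
        else:
            out.append(BARE_STRING.sub("std::string", line))
    return "".join(out)


def patch_header(path):
    """Back up `path` to `path + '.orig'`, then rewrite it in place."""
    shutil.copy2(path, path + ".orig")
    with open(path) as f:
        text = f.read()
    with open(path, "w") as f:
        f.write(qualify_strings(text))


if __name__ == "__main__":
    for header in sys.argv[1:]:
        patch_header(header)
        print("patched " + header)
```

For example: sudo python3 fix_headers.py /usr/local/include/tesseract/unichar.h /usr/local/include/tesseract/unicharset.h. Because already-qualified names are skipped, running it twice does no harm.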

Then continue the build:

sudo cmake ./

sudo make

cd /usr/local/share

sudo mkdir openalpr

cd openalpr

sudo mkdir config/

sudo cp -r ~/openalpr/config /usr/local/share/openalpr/

sudo cp -r ~/openalpr/runtime_data /usr/local/share/openalpr/

sudo mkdir /etc/openalpr/

sudo cp ~/openalpr/src/config/* /etc/openalpr/

sudo cp ~/openalpr/src/alpr* /usr/local/bin

sudo cp /usr/local/share/openalpr/config/openalpr.conf.defaults /etc/openalpr/openalpr.conf

sudo cp /usr/local/share/openalpr/config/alprd.conf.defaults /etc/openalpr/alprd.conf
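Since a failed copy is easy to miss, you may want to confirm that everything landed where the later steps expect it. This minimal Python 3 sketch checks the destination paths used by the commands above (alpr and alprd are both matched by the alpr* copy); the helper name is hypothetical, and the root parameter exists only so the check can be tried against a scratch directory.

```python
import os

# Destination paths created by the copy commands above.
EXPECTED_PATHS = [
    "/etc/openalpr/openalpr.conf",
    "/etc/openalpr/alprd.conf",
    "/usr/local/share/openalpr/config",
    "/usr/local/share/openalpr/runtime_data",
    "/usr/local/bin/alpr",
    "/usr/local/bin/alprd",
]


def missing_paths(paths, root="/"):
    """Return the entries of `paths` that do not exist below `root`."""
    missing = []
    for path in paths:
        full_path = os.path.join(root, path.lstrip("/"))
        if not os.path.exists(full_path):
            missing.append(path)
    return missing


if __name__ == "__main__":
    gaps = missing_paths(EXPECTED_PATHS)
    if gaps:
        print("Missing:")
        for path in gaps:
            print("  " + path)
    else:
        print("All expected OpenALPR files are in place.")
```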

Part 2: Test

In this part, we will test the alpr command-line tool both on images containing license plates and on a live video stream.

Test on Images

To run a test, first download a couple of sample images of cars with license plates, then run alpr on them. The -c option selects the country whose training data is used: us for US-style plates and eu for European plates.

sudo wget http://plates.openalpr.com/ea7the.jpg

sudo wget http://plates.openalpr.com/h786poj.jpg

alpr -c us ea7the.jpg

alpr -c eu h786poj.jpg
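To use these results from your own code, one option is to call the alpr tool with its -j switch, which prints the results as JSON instead of plain text. The sketch below assumes Python 3 and that alpr is on the PATH (it was copied to /usr/local/bin above). The helper names are hypothetical, and the code reads only the results, plate and confidence fields, the same fields the lpr.py script later in this tutorial reads from alprd.

```python
import json
import subprocess


def first_plate(alpr_json):
    """Return (plate, confidence) for the first plate in alpr's JSON output,
    or None when no plate was found."""
    data = json.loads(alpr_json)
    results = data.get("results") or []
    if not results:
        return None
    first = results[0]
    return first["plate"], first["confidence"]


def recognize(image_path, country="us"):
    """Run `alpr -c <country> -j <image>` and return first_plate() of its output."""
    output = subprocess.check_output(["alpr", "-c", country, "-j", image_path])
    return first_plate(output.decode("utf-8"))
```

For example, recognize("h786poj.jpg", "eu") should return a (plate, confidence) pair if alpr finds a plate in the image, or None otherwise.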

Test on Live Video Stream from Webcam

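To use the Raspberry Pi camera module as a webcam device, have the bcm2835-v4l2 driver load at boot; it exposes the camera as a V4L2 video device (usually /dev/video0). Edit the /etc/modules file:

sudo nano /etc/modules

Append the line below to the file:

bcm2835-v4l2

Save and exit (Ctrl + O, Enter, then Ctrl + X).

Next, edit the alprd daemon configuration: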

cd /etc/openalpr

sudo nano alprd.conf

Below the line that says '[daemon]', add or edit the following lines so that they look like this:

; country determines the training dataset used to recognize plates. Valid values are us, eu

country = us

; text name identifier for this location

site_id = raspberry_pi

; Declare each stream on a separate line

; each unique stream should be defined as stream = [url]

stream = webcam

; topn is the number of possible plate character variations to report

topn = 10

; Determines whether images that contain plates should be stored to disk

store_plates = 0

store_plates_location = /var/lib/openalpr/plateimages/

; upload address is the destination to POST to

upload_data = 0

upload_address = http://localhost:9000/push/
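If you want to double-check these settings, note that alprd.conf is an INI-style file in which a key such as stream may appear once per line. Python's configparser rejects duplicate keys by default, so this minimal Python 3 sketch uses a small hand-written parser instead. The function names are hypothetical, and the only validation performed (country is us or eu, at least one stream is defined) comes from the comments in the settings above.

```python
from typing import Dict, List

# Values accepted for `country`, per the comment in alprd.conf above.
VALID_COUNTRIES = {"us", "eu"}


def parse_section(text: str, section: str = "daemon") -> Dict[str, List[str]]:
    """Collect `key = value` pairs from one [section] of an INI-style file.

    Values are kept as lists because a key such as `stream` may repeat.
    """
    settings = {}  # type: Dict[str, List[str]]
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in ";#":
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1].strip()
            continue
        if current == section and "=" in line:
            key, value = line.split("=", 1)
            settings.setdefault(key.strip(), []).append(value.strip())
    return settings


def check_daemon_settings(settings: Dict[str, List[str]]) -> List[str]:
    """Return a list of human-readable problems (empty if none were found)."""
    problems = []
    countries = settings.get("country", [])
    if not countries:
        problems.append("country is not set")
    elif countries[-1] not in VALID_COUNTRIES:
        problems.append("unexpected country: " + countries[-1])
    if not settings.get("stream"):
        problems.append("no stream is defined")
    return problems
```

For example, check_daemon_settings(parse_section(open("/etc/openalpr/alprd.conf").read())) should return an empty list for the settings shown above.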

Save the file, exit, and reboot by typing:

sudo shutdown -r now

When the restart is complete, open a terminal again and run alprd in the foreground (the -f switch), so that its output is printed to the terminal:

alprd -f

Hold a license plate in front of the Raspberry Pi camera and check whether the plate number is displayed in the terminal.
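If nothing appears, first confirm that the camera is actually exposed as a video device; with the bcm2835-v4l2 module loaded it normally shows up as /dev/video0. The following Python 3 sketch (hypothetical helper name) only lists the /dev/video* device nodes and does not open the camera.

```python
import glob
import os


def list_video_devices(dev_dir="/dev"):
    """Return the sorted paths of video device nodes such as /dev/video0."""
    return sorted(glob.glob(os.path.join(dev_dir, "video*")))


if __name__ == "__main__":
    devices = list_video_devices()
    if devices:
        print("Video devices: " + ", ".join(devices))
    else:
        print("No /dev/video* device; check that bcm2835-v4l2 is listed in /etc/modules")
```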

Using OpenALPR with Python

sudo apt-get install beanstalkd

sudo apt-get install python-pip python-dev build-essential

sudo pip install PyYAML

sudo pip install beanstalkc

Add a line to rc.local so that beanstalkd starts automatically on boot:

cd /etc

sudo nano rc.local

On the line immediately before 'exit 0' (the penultimate line), add the following line so that beanstalkd runs as a background process:

beanstalkd &

Press Ctrl + O (then Enter) to save and Ctrl + X to exit.
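After the next reboot you can confirm that beanstalkd is running. This Python 3 sketch (hypothetical helper name) connects to port 11300, beanstalkd's default port and the one lpr.py uses, sends the beanstalkd stats command, and treats a reply starting with OK as success.

```python
import socket


def beanstalkd_running(host="localhost", port=11300, timeout=2.0):
    """Return True if a beanstalkd server answers the `stats` command."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as conn:
            conn.sendall(b"stats\r\n")
            reply = conn.recv(64)
    except OSError:  # refused, unreachable or timed out
        return False
    return reply.startswith(b"OK ")


if __name__ == "__main__":
    if beanstalkd_running():
        print("beanstalkd is running")
    else:
        print("beanstalkd is not reachable on localhost:11300")
```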

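Create a new script called lpr.py:

sudo nano lpr.py

Copy and paste the following script. It starts alprd, reads each result from the 'alprd' beanstalkd queue, appends it to a CSV log, and opens a gate through a USB relay on /dev/ttyUSB0 when the plate is on the whitelist: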

    #!/usr/bin/python
    import datetime
    import json
    import os
    import time

    import beanstalkc

    # Plates that are allowed to open the gate; replace with your own.
    WHITELIST = ["your plate here"]
    # CSV log of every plate read (adjust the path for your user).
    PLATES_CSV = "/home/pi/plates.csv"
    # Serial device of the USB relay that drives the gate.
    RELAY_DEVICE = "/dev/ttyUSB0"

    # Start the OpenALPR daemon in the background; it reads its settings
    # (country, stream, topn, ...) from /etc/openalpr/alprd.conf.
    os.system("alprd &")

    beanstalk = beanstalkc.Connection(host="localhost", port=11300)
    beanstalk.watch("alprd")     # alprd queues its results in the 'alprd' tube
    beanstalk.ignore("default")  # don't consume jobs from the default tube


    def opengate():
        print("Opening gate.")
        # Write the relay command bytes straight to the serial device;
        # shelling out to `echo -e` is unreliable because /bin/sh may not support -e.
        with open(RELAY_DEVICE, "wb") as relay:
            relay.write(b"\xff\x01\x01")  # relay on
            relay.flush()
            time.sleep(1)
            relay.write(b"\xff\x01\x00")  # relay off
        print("Gate opened.")
        # Give the vehicle time to pass, then discard reads queued meanwhile.
        time.sleep(45)
        emptybeanstalk()


    def emptybeanstalk():
        print("Emptying beanstalk.")
        # reserve(timeout=0) returns None as soon as no job is ready.
        while True:
            job = beanstalk.reserve(timeout=0)
            if job is None:
                break
            job.delete()
        print("Beanstalk emptied.")
        print("Watching gate.")


    def main():
        emptybeanstalk()
        while True:
            job = beanstalk.reserve()  # blocks until alprd queues a result
            body = job.body
            job.delete()
            d = json.loads(body)
            if not d.get("results"):
                continue  # nothing recognized in this result
            epoch = d["epoch_time"]
            if epoch > 1e11:
                epoch = epoch / 1000.0  # value is in milliseconds; convert to seconds
            realtime = datetime.datetime.fromtimestamp(epoch).strftime("%Y-%m-%d %H:%M:%S")
            first = d["results"][0]  # first plate found in the frame
            plate = first["plate"]
            confid = first["confidence"]
            uuid = d["uuid"]
            line = ", ".join([realtime, str(plate), str(confid), str(uuid)])
            with open(PLATES_CSV, "a") as plates:
                plates.write(line + "\n")
            print(line)
            if plate in WHITELIST:
                opengate()


    if __name__ == "__main__":
        main()

Make the file executable:

sudo chmod u+x lpr.py

Run the script and test it by holding license plates in front of the Raspberry Pi camera or webcam:

sudo ./lpr.py
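To exercise the whitelist and gate logic without a real car, you can push a hand-made result into the same queue. The sketch below is a hypothetical test helper, not part of OpenALPR: it builds a JSON body containing only the fields lpr.py reads (epoch_time, uuid, and results with plate and confidence), whereas real alprd output carries more fields. It relies on the beanstalkc module installed earlier and its use and put calls, and like lpr.py it is meant to run under the Python interpreter that beanstalkc was installed for.

```python
import json
import time
import uuid


def make_fake_result(plate, confidence=90.0):
    """Build a minimal JSON body with only the fields lpr.py reads."""
    return json.dumps({
        "epoch_time": int(time.time()),  # seconds since the epoch
        "uuid": "test-" + uuid.uuid4().hex,
        "results": [{"plate": plate, "confidence": confidence}],
    })


def push_fake_result(plate, host="localhost", port=11300):
    """Queue a fake result in the 'alprd' tube that lpr.py watches."""
    import beanstalkc  # imported here so make_fake_result works without it

    conn = beanstalkc.Connection(host=host, port=port)
    conn.use("alprd")
    conn.put(make_fake_result(plate))
    conn.close()
```

While lpr.py is running, calling push_fake_result("your plate here") should trigger the gate. A job pushed during the script's 45-second pause after opening the gate is discarded when the queue is emptied.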

NOTE:

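- To expand the virtual memory (useful if a build runs out of memory), edit /etc/dphys-swapfile and set CONF_SWAPSIZE=2048, then apply the change with sudo /etc/init.d/dphys-swapfile stop followed by sudo /etc/init.d/dphys-swapfile start. Note the original value before changing it and restore it once you are done, because heavy swapping wears out the SD card in the long run.

- Placeholders such as path/to/src stand for the location of that file or folder on your system.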
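Noting and restoring the swap size can also be scripted. This Python 3 sketch (hypothetical helper names) reads and rewrites the active CONF_SWAPSIZE= line of the /etc/dphys-swapfile text while ignoring commented-out lines. It only changes the configuration text, not the swap file that is currently in use.

```python
import re

# Active (uncommented) swap-size line; [ \t]* avoids swallowing the newline.
SWAPSIZE_LINE = r"^CONF_SWAPSIZE=(\d*)[ \t]*$"


def read_swapsize(text):
    """Return the configured swap size in MB, or None if it is not set."""
    match = re.search(SWAPSIZE_LINE, text, flags=re.MULTILINE)
    if match is None or not match.group(1):
        return None
    return int(match.group(1))


def set_swapsize(text, megabytes):
    """Return `text` with CONF_SWAPSIZE set to `megabytes` (appended if absent)."""
    new_line = "CONF_SWAPSIZE=%d" % megabytes
    updated, count = re.subn(SWAPSIZE_LINE, new_line, text, flags=re.MULTILINE)
    if count == 0:
        if updated and not updated.endswith("\n"):
            updated += "\n"
        updated += new_line + "\n"
    return updated
```

For example, read_swapsize(open("/etc/dphys-swapfile").read()) returns the current size (typically 100 on a default Raspbian install), which you can note before writing set_swapsize(text, 2048) back to the file with sudo.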